The "Machine Learning with Python" program offers a hands-on approach to learning machine learning concepts using Python. Participants will master key algorithms, data preprocessing, model evaluation, and deployment techniques with popular libraries such as Scikit-learn, TensorFlow, and Keras.

Program Overview:

The Machine Learning with Python program is designed for individuals looking to build a solid foundation in machine learning (ML) and apply it using Python, one of the most widely used programming languages in the data science field. This program covers the essentials of ML, from data preprocessing to advanced algorithm implementation. Participants will explore supervised and unsupervised learning algorithms, including linear regression, decision trees, k-means clustering, and support vector machines. They will also learn how to implement deep learning models using TensorFlow and Keras. Throughout the program, participants get hands-on experience in building, training, and evaluating machine learning models, along with techniques for handling real-world data such as feature scaling, data imputation, and data augmentation. Additionally, the program teaches how to fine-tune models, assess their performance, and deploy them in production. By the end of the course, learners will be able to solve complex ML problems in domains like finance, healthcare, and marketing.

Program Structure:

The Machine Learning with Python program is structured to offer both theoretical knowledge and practical experience in building machine learning models. The course begins with an introduction to Python for Data Science, covering libraries like NumPy, Pandas, and Matplotlib for data manipulation and visualization. The next module focuses on Data Preprocessing, where learners will gain hands-on skills in preparing datasets for machine learning, handling missing data, feature scaling, and encoding categorical variables. The following modules cover Supervised Learning (including regression and classification algorithms like decision trees, random forests, and support vector machines), as well as Unsupervised Learning (like k-means clustering and hierarchical clustering). Later, learners will dive into Deep Learning, learning how to create neural networks with TensorFlow and Keras. Finally, the program explores Model Evaluation and Tuning, teaching techniques such as cross-validation, hyperparameter tuning, and model optimization. The course wraps up with a Capstone Project, where participants apply everything they've learned to solve a real-world problem.
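
As a rough illustration of the Data Preprocessing module described above, the sketch below shows mean imputation, min-max feature scaling, and one-hot encoding. It uses only the Python standard library so that it stays self-contained. The function names (`impute_mean`, `min_max_scale`, `one_hot`) and the sample values are hypothetical, and in the course itself these steps would more likely be done with Pandas and Scikit-learn.

```python
from statistics import mean


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(labels):
    """Encode categorical labels as one-hot rows, columns in sorted order."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]


# Hypothetical sample data: one numeric column with a gap, one categorical column.
ages = [20, None, 40, 30]
filled = impute_mean(ages)        # the gap is filled with the mean of 20, 40, 30
scaled = min_max_scale(filled)    # values now lie between 0 and 1
encoded = one_hot(["red", "blue", "red"])  # columns are ["blue", "red"]
```

Imputing before scaling matters here: the minimum and maximum used for scaling are computed on the filled column, so no missing value reaches the arithmetic.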

Skills that You Master:

By completing the Machine Learning with Python program, you will gain proficiency in a range of essential machine learning and Python-related skills:

- Data Preprocessing: Learn to clean and preprocess raw data by handling missing values, normalizing data, encoding categorical features, and transforming variables.

- Supervised Learning: Master algorithms like Linear Regression, Logistic Regression, Decision Trees, Random Forests, and Support Vector Machines (SVMs) for classification and regression tasks.

- Unsupervised Learning: Understand clustering algorithms such as K-Means and Hierarchical Clustering for pattern recognition, and Principal Component Analysis (PCA) for dimensionality reduction.

- Deep Learning: Gain hands-on experience building neural networks using TensorFlow and Keras for tasks like image and text classification.

- Model Evaluation and Tuning: Learn how to evaluate model performance using metrics like accuracy, precision, recall, F1 score, and AUC. Master techniques such as cross-validation, hyperparameter tuning, and grid search to optimize model performance.

- Deployment: Understand how to deploy machine learning models into production using tools like Flask, Docker, and cloud services.

These skills will enable you to build, evaluate, and deploy machine learning models to solve real-world business problems.
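
To make the Model Evaluation and Tuning skill more concrete, here is a minimal sketch of how accuracy, precision, recall, and F1 score follow from a binary confusion matrix, plus a simple way to split sample indices into k folds for cross-validation. The function names and the example labels are hypothetical. In practice Scikit-learn provides equivalents, and this standard-library version is only meant to show the arithmetic.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n_samples) if i < start or i >= stop]
        yield train, test
        start = stop


# Hypothetical ground truth and predictions for eight samples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
folds = list(k_fold_indices(5, 2))
```

Each sample appears in exactly one test fold, which is what lets cross-validation estimate performance on data the model was not trained on.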

Who Should Enroll:

The Machine Learning with Python program is ideal for software developers, data analysts, and aspiring data scientists with a strong background in programming and mathematics. It's well-suited for individuals who want to learn how to apply machine learning algorithms in Python to solve real-world problems in areas like finance, marketing, healthcare, and more. Prior experience with Python is recommended.

- Teacher: ADG User

Learning Spark's GraphX provides an in-depth exploration of Apache Spark's graph processing library. This program teaches how to manipulate and analyze graph data at scale, leveraging GraphX’s powerful algorithms for graph-based analysis and machine learning applications.

Program Overview:

The Learning Spark's GraphX program focuses on Apache Spark's powerful graph processing component, GraphX, which is designed to process large-scale graph data efficiently. GraphX is an essential tool for analyzing relationships between entities, making it particularly useful for applications like social network analysis, recommendation systems, fraud detection, and more. This program provides learners with a deep understanding of graph theory concepts, the inner workings of GraphX, and how to utilize it for real-world data analysis. The curriculum includes topics such as creating and manipulating graphs, performing graph-based transformations, and using GraphX’s built-in algorithms like PageRank, Connected Components, and Triangle Counting. Through hands-on exercises, participants will learn how to build scalable graph processing workflows and integrate them with Spark’s other data processing capabilities. By the end of the program, learners will be able to work with large graph datasets and implement advanced graph algorithms to gain insights from complex relationships in data.

Program Structure:

The Learning Spark's GraphX program is structured into several modules, beginning with an introduction to graph theory and its applications in data analysis. The first module covers the foundational concepts of graphs, vertices, and edges, as well as key graph processing terminology. The next modules focus on Spark’s architecture and the integration of GraphX with the broader Spark ecosystem. Learners will explore how to create, store, and manipulate graph data using GraphX’s RDD-based (Resilient Distributed Dataset) API together with Spark DataFrames.

Key topics include:

- Graph Creation and Manipulation: Learn how to build graphs from structured and unstructured data and perform common graph transformations.

- Graph Algorithms: Deep dive into popular algorithms such as PageRank, connected components, and graph traversal techniques.

- Graph Analytics: Learn to analyze graph data for insights, such as community detection, anomaly detection, and pathfinding.

- Optimization Techniques: Understand performance optimization strategies when working with large-scale graphs.

The program concludes with a hands-on project where learners can apply GraphX to solve real-world problems.
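
As an informal illustration of the PageRank topic listed above, the following sketch runs the textbook power-iteration form of PageRank on a tiny directed graph. It is plain Python, not GraphX code, and the function name, the damping factor of 0.85, the iteration count, and the edge list are all assumptions chosen for the example.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Textbook PageRank by power iteration over a directed edge list."""
    vertices = sorted({v for edge in edges for v in edge})
    out_links = {v: [] for v in vertices}
    for src, dst in edges:
        out_links[src].append(dst)

    n = len(vertices)
    ranks = {v: 1.0 / n for v in vertices}
    for _ in range(iterations):
        # Rank held by vertices with no outgoing edges is spread evenly.
        dangling = sum(ranks[v] for v in vertices if not out_links[v])
        new_ranks = {v: (1 - damping) / n + damping * dangling / n
                     for v in vertices}
        # Every vertex passes its rank on in equal shares along its out-edges.
        for src in vertices:
            for dst in out_links[src]:
                new_ranks[dst] += damping * ranks[src] / len(out_links[src])
        ranks = new_ranks
    return ranks


# Hypothetical graph: a three-vertex cycle plus one extra vertex pointing into it.
edges = [("a", "b"), ("b", "c"), ("c", "a"), ("d", "c")]
ranks = pagerank(edges)
```

In this example vertex `c` receives links from two vertices and ends up with the highest rank, while `d`, which nothing links to, keeps only the baseline share.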

Skills that You Master:

By completing the Learning Spark's GraphX program, you will master the following skills:

- Graph Theory Fundamentals: Understand basic concepts of graph theory, including vertices, edges, directed/undirected graphs, and graph traversals, and how these concepts are applied in data science.

- GraphX APIs: Gain proficiency in working with GraphX’s RDD-based graph API together with Spark DataFrames, learning how to create, manipulate, and store graph data at scale.

- Graph Algorithms: Master key graph algorithms, including:

  - PageRank for ranking vertices in a graph.

  - Connected Components for identifying clusters in graphs.

  - Triangle Counting for measuring how tightly connected the neighborhood of each vertex is.

  - Shortest Path and Breadth-First Search for pathfinding and graph traversal.

- Big Data Processing: Learn how to scale graph operations on distributed data using Apache Spark, enabling efficient graph processing across clusters.

- Graph-Based Machine Learning: Apply machine learning techniques to graph data, building recommendation systems, social network analysis models, and fraud detection tools.

These skills will empower you to apply graph-based analysis to complex data structures and solve large-scale problems in industries like social media, finance, and telecommunications.
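
The Connected Components and Triangle Counting algorithms named in the skills list can be sketched on a single machine as follows. This is plain Python rather than GraphX, the function names and the sample edge list are hypothetical, and the graph is treated as undirected. Each component is labeled with its smallest vertex id, which is one common convention.

```python
from collections import defaultdict


def build_adjacency(edges):
    """Build an undirected adjacency map, ignoring self-loops."""
    adjacency = defaultdict(set)
    for u, v in edges:
        if u != v:
            adjacency[u].add(v)
            adjacency[v].add(u)
    return adjacency


def connected_components(edges):
    """Label every vertex with the smallest vertex id in its component."""
    adjacency = build_adjacency(edges)
    labels = {}
    # Visiting start vertices in sorted order means the first unlabeled
    # vertex found in a component is also its smallest id.
    for start in sorted(adjacency):
        if start in labels:
            continue
        labels[start] = start
        stack = [start]
        while stack:
            vertex = stack.pop()
            for neighbor in adjacency[vertex]:
                if neighbor not in labels:
                    labels[neighbor] = start
                    stack.append(neighbor)
    return labels


def triangle_counts(edges):
    """Count, for every vertex, the triangles that pass through it."""
    adjacency = build_adjacency(edges)
    counts = {}
    for vertex, neighbors in adjacency.items():
        # Each triangle through `vertex` is found twice, once from each
        # of its other two corners, so halve the total.
        shared = sum(len(neighbors & adjacency[u]) for u in neighbors)
        counts[vertex] = shared // 2
    return counts


# Hypothetical graph: a triangle (1, 2, 3) with a tail to 4, and a separate pair (5, 6).
edges = [(1, 2), (2, 3), (3, 1), (3, 4), (5, 6)]
labels = connected_components(edges)
triangles = triangle_counts(edges)
```

A distributed engine would arrive at the same labels and counts by passing messages between partitions instead of walking one in-memory adjacency map.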

Who Should Enroll:

The Learning Spark's GraphX program is ideal for data engineers, data scientists, and software developers with a background in programming and big data processing. It’s suited for professionals working with graph-based data or those looking to integrate graph analytics into their existing Spark workflows. Prior experience with Spark and basic knowledge of graph theory is recommended.

- Teacher: ADG User

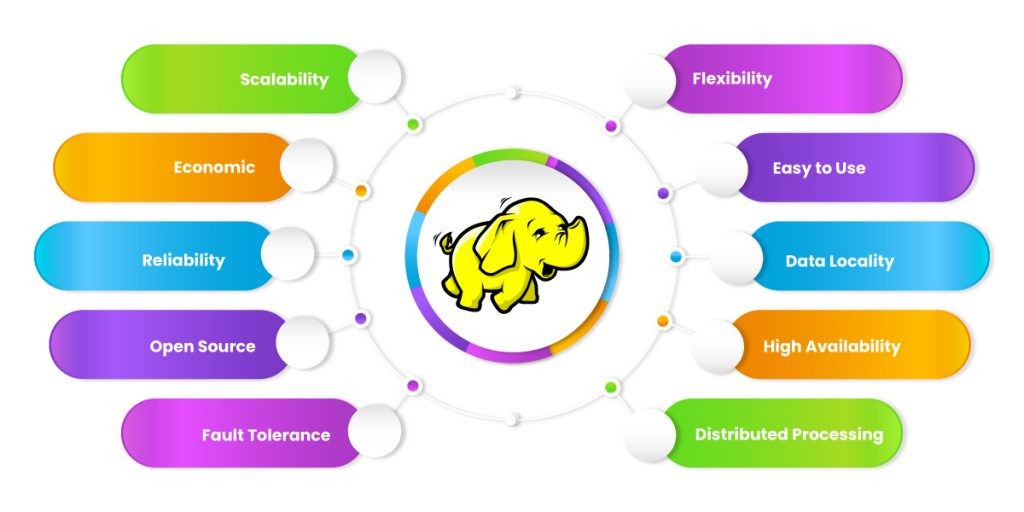

The Hadoop Framework program offers in-depth training on Apache Hadoop, a widely used open-source platform for processing and analyzing large-scale data. Learn how to work with Hadoop's distributed storage and computing capabilities, including HDFS, MapReduce, YARN, and related tools.

Program Overview:

The Hadoop Framework program is designed to provide comprehensive knowledge of Apache Hadoop, an open-source software framework that allows the distributed processing of large datasets across clusters of computers. This program covers key components of the Hadoop ecosystem, including the Hadoop Distributed File System (HDFS), MapReduce for batch processing, YARN for resource management, and other essential tools like Hive, Pig, and HBase. Participants will gain hands-on experience with setting up, configuring, and optimizing a Hadoop cluster, as well as running real-world big data analytics workloads. The curriculum is structured to help learners understand both the theoretical and practical aspects of Hadoop, including data storage, parallel computing, and job scheduling. By the end of the program, participants will be able to handle large-scale data processing tasks and integrate Hadoop with other big data tools to build scalable, efficient data solutions.

Program Structure:

The Hadoop Framework program is divided into several modules, each focusing on a core aspect of the Hadoop ecosystem. It starts with an introduction to big data and the challenges of processing and storing large volumes of data. The first module covers Hadoop architecture, including the fundamentals of HDFS (Hadoop Distributed File System) and how data is stored and managed across multiple nodes in a cluster. The next modules dive into MapReduce programming, which allows the distribution of processing tasks across nodes, and YARN (Yet Another Resource Negotiator), responsible for resource management and job scheduling. Further topics include Hive (for data warehousing), Pig (for data flow scripting), HBase (NoSQL database for real-time read/write operations), and Sqoop (for importing/exporting data from relational databases). Throughout the program, learners will complete hands-on exercises and case studies to understand how Hadoop can be used to process and analyze big data efficiently.

Skills that You Master:

Upon completing the Hadoop Framework program, you will master the following skills:

- Hadoop Distributed File System (HDFS): Learn how to store and manage large datasets across distributed nodes in a scalable and fault-tolerant manner.

- MapReduce: Understand how to use MapReduce programming for parallel data processing tasks, breaking large problems into smaller, manageable parts for efficient computation.

- YARN: Master resource management and job scheduling in a Hadoop cluster using YARN, ensuring optimized use of computational resources.

- Data Processing with Hive & Pig: Gain expertise in querying and processing large datasets using Hive’s SQL-like syntax and Pig’s high-level scripting language.

- NoSQL with HBase: Learn how to set up and use HBase, a distributed NoSQL database, for handling real-time read/write operations on massive datasets.

- Integration with Other Big Data Tools: Learn how Hadoop integrates with other tools in the big data ecosystem, including Spark, Flume, and Sqoop, to enhance processing and analysis.

By the end, you will be proficient in setting up and managing Hadoop clusters and using the ecosystem to handle large-scale data processing tasks.
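
The MapReduce model mentioned in the skills list can be illustrated with a single-process word count. The sketch below imitates the map, shuffle, and reduce phases in plain Python. It does not use the Hadoop API, and the function names and input lines are hypothetical.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values collected for one key."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence over an iterable of lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Hypothetical input: on a cluster, each line could sit on a different node.
lines = ["big data big clusters", "data pipelines"]
counts = word_count(lines)
```

Because `map_phase` looks at one line at a time and `reduce_phase` at one key at a time, both phases can run in parallel across nodes, which is the property MapReduce relies on.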

Who Should Enroll:

This program is ideal for IT professionals, data engineers, software developers, and business analysts looking to enhance their big data skills. It’s suited for individuals with basic programming knowledge who want to dive into big data processing, storage, and analytics using Hadoop. Anyone involved in managing large datasets or working with data infrastructure will benefit from this program.

- Teacher: ADG User

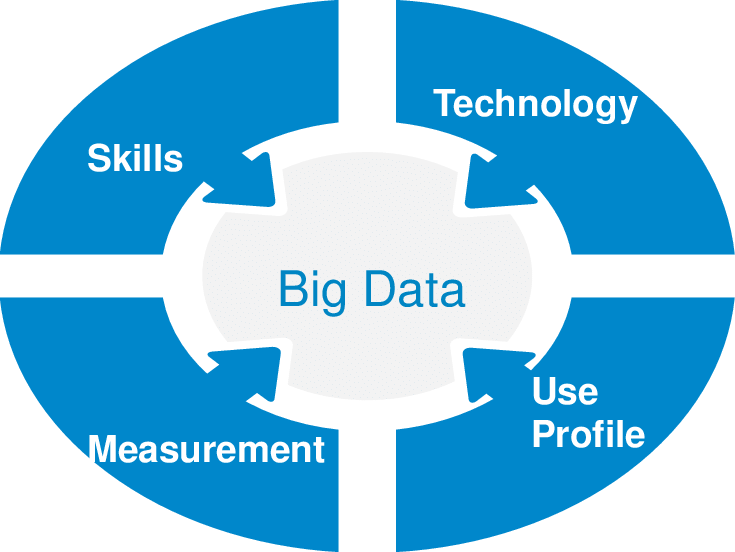

The program on Big Data Fundamentals provides an introduction to the core concepts, technologies, and tools used to process and analyze large-scale data. Participants will learn about distributed computing, big data frameworks, data storage, and data processing with tools like Hadoop and Spark.

Program Overview:

The Big Data Fundamentals program offers a comprehensive introduction to the principles and tools used to manage and analyze large-scale datasets, which are crucial in today’s data-driven world. Participants will explore the characteristics of big data, including volume, velocity, variety, and veracity, and how they impact data processing and analysis. The program covers key concepts in distributed computing and introduces popular big data frameworks such as Apache Hadoop and Apache Spark. Participants will gain practical experience working with the Hadoop Distributed File System (HDFS) for storing large datasets and MapReduce for processing them in parallel across clusters. In addition, learners will be introduced to Spark, an engine that is often faster than Hadoop MapReduce for large-scale data processing because it keeps intermediate data in memory, and other tools like Hive for querying data, Pig for data flow scripting, and HBase for real-time NoSQL database management. By the end of the program, participants will understand how to handle, store, and process big data efficiently and effectively.

Program Structure:

The Big Data Fundamentals program is structured into multiple modules, each focused on an essential aspect of big data technology. The program starts with an introduction to Big Data concepts, where learners will explore the defining characteristics of big data, its challenges, and opportunities. In the next modules, learners will be introduced to the Hadoop Ecosystem, beginning with the Hadoop Distributed File System (HDFS) and understanding how it enables fault-tolerant, distributed data storage. The program then delves into MapReduce, teaching how to write and execute MapReduce programs to process data in parallel across distributed systems. Next, the focus shifts to Apache Spark, covering its features, programming models, and how its in-memory processing improves on Hadoop MapReduce in speed. Additional modules cover Hive for SQL-like queries on large datasets, Pig for high-level data scripting, and HBase for NoSQL databases. Hands-on projects, assignments, and real-world use cases help participants understand how to implement these technologies in practical settings.

Skills that You Master:

Upon completing the Big Data Fundamentals program, you will acquire the following key skills:

- Big Data Concepts: Understand the core characteristics of big data, including volume, variety, velocity, and veracity, and how they impact data processing strategies.

- Hadoop Ecosystem: Learn to work with HDFS for distributed storage and understand the architecture behind MapReduce, enabling you to process large datasets across multiple nodes in parallel.

- Apache Spark: Gain hands-on experience with Spark, learning how it differs from Hadoop MapReduce and why its in-memory processing is often faster, particularly for iterative workloads.

- Hive: Learn to query large datasets using Hive, a data warehouse infrastructure built on top of Hadoop that enables SQL-like queries on big data.

- Pig: Master Pig, a high-level platform for creating MapReduce programs with simpler scripting, making data processing easier and faster.

- HBase: Understand how to use HBase, a distributed NoSQL database for real-time data storage and retrieval in big data environments.

These skills will allow you to handle, process, and analyze large datasets in a distributed computing environment, preparing you for roles in big data analytics and engineering.
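
For a feel of how HDFS distributes a file, the short sketch below estimates how many blocks a file is split into and how much raw disk space its replicas occupy. The 128 MB block size and replication factor of 3 are assumed here as typical defaults. Both are configurable per cluster, and the function name and file size are hypothetical.

```python
import math


def hdfs_footprint(file_size_mb, block_size_mb=128, replication=3):
    """Estimate block count, replica count, and raw storage for one file."""
    # The last block may be partially filled; it only uses the space it needs.
    blocks = math.ceil(file_size_mb / block_size_mb)
    block_replicas = blocks * replication
    raw_storage_mb = file_size_mb * replication
    return blocks, block_replicas, raw_storage_mb


# Hypothetical 1000 MB file stored with the assumed defaults.
blocks, block_replicas, raw_mb = hdfs_footprint(1000)
```

Replication is what provides the fault tolerance: losing one node still leaves other copies of each block available elsewhere in the cluster.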

Who Should Enroll:

The Big Data Fundamentals program is ideal for aspiring data engineers, analysts, and IT professionals who want to understand the core principles and technologies of big data. It's suitable for individuals with a basic knowledge of programming and data analysis who are looking to expand their skillset into big data processing and storage frameworks like Hadoop and Spark.

- Teacher: ADG User